I drove 3 hours round trip to pick up the new MacBook Air the day after I ordered it. It was either that or wait 3–4 weeks for delivery. I don’t know about you, but I was too excited to start playing with this new laptop and putting it through its paces. I have had several MacBooks over the years. As a contractor (programmer), I have worked on fully loaded versions of the 2017 and 2018 MacBook Pro, and most recently the 2019 MacBook Pro with an i9 and 32 GB of RAM.

Like many people, I have been intrigued by the performance claims and glowing reviews of Apple’s new M1 chip, along with its outlandish battery life. The hype reached new levels as I watched YouTube videos of the MacBook Air driving five 1080p and 4K monitors. It does this from the integrated graphics built into the M1 SoC (system on a chip), which combines the processor, RAM, GPU, and other Apple hardware features in one package.

When I strolled up to the window at the Apple Store, the salesperson was excited to hand me the new MacBook Air (16 GB of RAM with a 1 TB SSD) and tell me how incredible the performance was. He said that he and the other employees had been throwing 4K video editing and other stress tests at the MacBook for hours without being able to crash it or make it unresponsive. I took that as a challenge to see whether I could max out the new M1 SoC with my day-to-day workflow. I wasn’t surprised when I managed to do exactly that.

In another recent post, I set out to build a high-performance image hosting service. The basic premise is that I need to be able to load thousands of photos (thumbnails) almost instantly. I don’t want to wait for buffering, loading screens, or downloads. See my previous article if you are interested in reading more about that project.

One task I mention in my previous article is processing images by scaling each one down to a 250x250 thumbnail instead of loading the full-resolution image. This shrinks each image to roughly 1/10th of its original size and lets me load thousands of image files seemingly instantly, without loading screens. Here is the lazy Node.js code I wrote to do it:

```javascript
const { Image } = require('image-js');
const fs = require('fs');

// Read the list of file names in the source folder (asynchronously)
fs.readdir('./large-batch-images', (err, files) => {
  if (err) throw err;

  // Start a load/resize/save for every JPEG. forEach does not wait for the
  // async callbacks, so all of them end up running at the same time.
  files.forEach(async (file) => {
    if (file.includes('.jpg')) {
      // read the file from the SSD
      const image = await Image.load(`./large-batch-images/${file}`);

      // scale the image down to 250x250
      const resized = image.resize({ width: 250, height: 250 });

      // write the thumbnail to the SSD
      return await resized.save(`./thumbnails-large-250/${file}`);
    }
  });
});
```

Any astute Node.js developer will probably say “that isn’t a very efficient script,” and you would be right. That is the point: I wanted to test the performance of the new 8-core M1 SoC and 16 GB of RAM with the kind of script I would normally throw together in a hurry to batch-process a large amount of data in a reasonable amount of time. If the code isn’t fast enough for what I am doing, then I start optimizing it for my needs.

It reminded me of an old adage one of my professors used to repeat: “Who cares if you write a poorly performing program? In 2 years it will run twice as fast when the next-generation processors come out.” He is definitely right when it comes to server-side code, but in the front-end space we have to be as efficient as possible to prevent terrible user experiences like unresponsive pages.

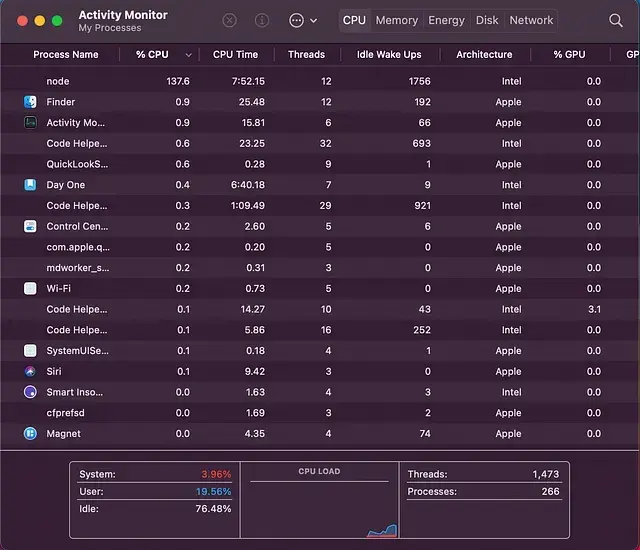

For this stress test, I processed about 7,000 images asynchronously with Node.js, converting each one to 250x250 and writing it to the SSD.

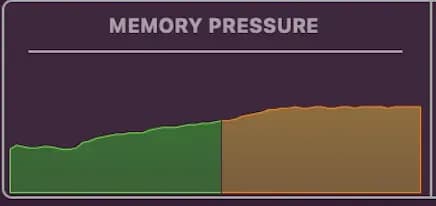

As you can see below, the new MacBook did fairly well. It made it to about 5,000 images before performance began to fall off sharply.

(Lazy) CPU utilization: 137%; memory utilization: 19.54 GB

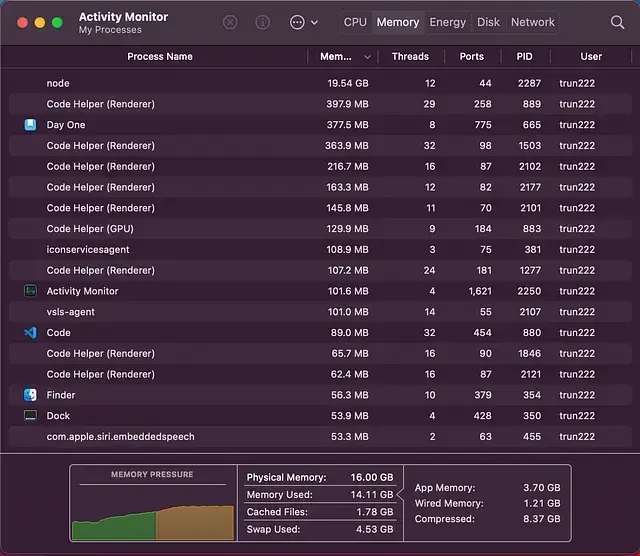

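At around 5,500 processed images, the RAM was completely maxed out and macOS began using my SSD as swap space in place of memory. You will also notice that the Node.js script ran at around 137% CPU utilization, which is interesting. Activity Monitor counts 100% as one fully busy core, so the script was keeping roughly 1.4 of the M1’s eight cores busy, even though Node.js runs JavaScript on a single main thread. The extra most likely comes from background threads such as the garbage collector and file I/O.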
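If you want to watch memory from inside the script rather than in Activity Monitor, Node’s built-in `process.memoryUsage()` reports the process’s memory in bytes. Here is a minimal sketch. `memorySnapshotMB` and `startMemoryLogger` are hypothetical helper names of my own, and RSS is only a rough analogue of Activity Monitor’s Memory column, not the same number.

```javascript
// Hypothetical helpers (not from the original scripts): report this Node
// process's memory usage in megabytes using the built-in process.memoryUsage().
function memorySnapshotMB() {
  const { rss, heapTotal, heapUsed, external } = process.memoryUsage();
  const toMB = (bytes) => Math.round((bytes / 1024 / 1024) * 10) / 10;
  return {
    rss: toMB(rss), // total resident memory of the process
    heapTotal: toMB(heapTotal), // memory reserved for the V8 JavaScript heap
    heapUsed: toMB(heapUsed), // portion of the V8 heap currently in use
    external: toMB(external), // memory outside the V8 heap (e.g. Buffer contents)
  };
}

// Log a snapshot every `intervalMs` while a batch job runs.
// unref() keeps this timer from holding the process open after the job ends.
function startMemoryLogger(intervalMs = 5000) {
  const timer = setInterval(() => {
    const { rss, heapUsed, external } = memorySnapshotMB();
    console.log(`rss=${rss} MB heapUsed=${heapUsed} MB external=${external} MB`);
  }, intervalMs);
  timer.unref();
  return timer;
}
```

To use it, call `const timer = startMemoryLogger();` before `fs.readdir` and `clearInterval(timer)` once the batch finishes.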

For the sake of this little experiment, and because I actually wanted to process all ~7,000 images, I decided to refactor the code a bit to see what the performance implications were.

```javascript
import fs from 'fs';
// Synchronous load/encode helpers (see the note below about rewriting the library code)
import { encodeJpeg, loadBinary } from 'image-js';

// Read the file names synchronously
const files = fs.readdirSync('./large-batch-images');

// Resize and write each image to disk, one at a time
files.forEach((file) => {
  if (file.includes('.jpg')) {
    // read the file from the SSD
    const imageFile = fs.readFileSync(`./large-batch-images/${file}`);

    // decode the bytes into an image object
    const image = loadBinary(imageFile);

    // scale the image down to 250x250
    const resized = image.resize({ width: 250, height: 250 });

    // encode as JPEG and write the thumbnail to the SSD
    fs.writeFileSync(`./thumbnails-large-250/${file}`, encodeJpeg(resized));
  }
});
```

I had to rewrite some of the library code I used for image processing in the lazy script, because it was written entirely with promises and was therefore asynchronous. An interesting gotcha in Node.js is that writing your code asynchronously is generally considered best practice. That is usually true, because non-blocking I/O lets other code keep executing. For batch processing of data, however, that rule of thumb breaks down. Unless, of course, you have tons of RAM and processing power. Then by all means.

The reason is that the lazy script’s forEach loop does not wait for each async callback to finish, so Node.js starts loading thousands of files almost at once. Every decoded image stays in memory until its resize and save complete, so the garbage collector cannot free it while many more images are being opened on top of it. Memory use keeps climbing until you run out or the Node.js process crashes. Conversely, when everything is synchronous and the steps run one after another instead of simultaneously, each image can be garbage-collected before the next one is opened and processed. You can see these trends in the screenshots from my tests below.
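To make the difference concrete, here is a small illustration of my own that counts how many jobs are in flight at once. `fakeImageJob` is a hypothetical stand-in for the load/resize/save work (it just waits 5 ms), so no images are involved. The number of jobs in flight is what drives memory use, since each in-flight job would be holding a decoded image. The `for...of` version is a swapped-in middle ground, not one of my scripts: it keeps the async calls but awaits them one at a time.

```javascript
// Hypothetical stand-in for Image.load + resize + save: waits 5 ms, then
// resolves with the file name.
const fakeImageJob = (file) => new Promise((resolve) => setTimeout(resolve, 5, file));

// Wraps jobs so we can record how many are running at the same time.
function makeTracker() {
  const stats = { inFlight: 0, peak: 0 };
  async function track(job) {
    stats.inFlight += 1;
    stats.peak = Math.max(stats.peak, stats.inFlight);
    try {
      await job();
    } finally {
      stats.inFlight -= 1;
    }
  }
  return { stats, track };
}

// Like the lazy script: forEach starts a job for every file without waiting.
// (The lazy script drops these promises; we keep them only to await the end.)
async function peakWithForEach(files) {
  const { stats, track } = makeTracker();
  const jobs = [];
  files.forEach((file) => jobs.push(track(() => fakeImageJob(file))));
  await Promise.all(jobs);
  return stats.peak;
}

// Sequential: for...of with await starts each job only after the previous
// one has finished, similar to the one-at-a-time synchronous script.
async function peakWithForOf(files) {
  const { stats, track } = makeTracker();
  for (const file of files) {
    await track(() => fakeImageJob(file));
  }
  return stats.peak;
}

const files = Array.from({ length: 100 }, (_, i) => `img-${i}.jpg`);
peakWithForEach(files).then((peak) => console.log('forEach peak:', peak)); // 100
peakWithForOf(files).then((peak) => console.log('for...of peak:', peak)); // 1
```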

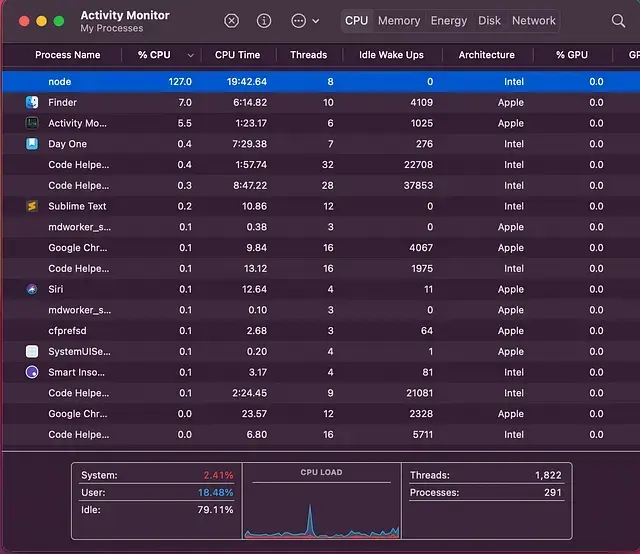

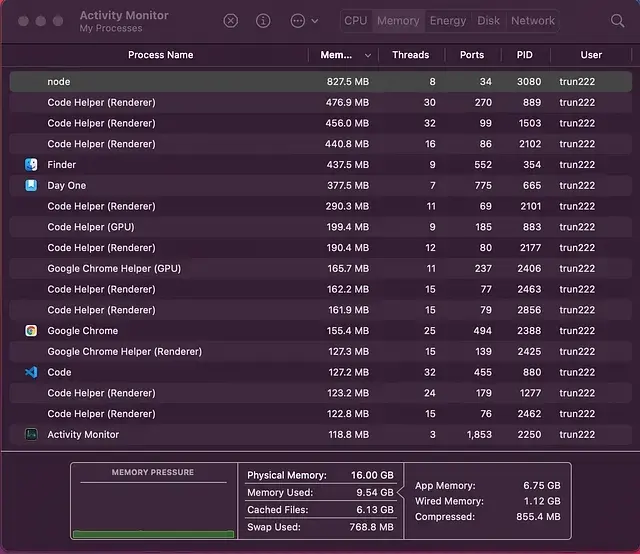

(Improved) CPU utilization: 127.0%; memory utilization: 827.5 MB

In closing, the improved script’s CPU utilization was about the same as the lazy script’s. What is dramatically better is memory utilization: it stayed at or below 1 GB for the entire duration of the image processing. As I expected, though, the synchronous approach is a lot slower, but it is more stable on a machine with less RAM. I estimate that the lazy script took about 20 minutes to get through ~5,000 images, at which point the Node process became unstable and started writing to swap (the SSD). The improved script, by contrast, took around 35 minutes to reach the same number of images and barely used swap.
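To put those estimates in per-image terms, here is the arithmetic. It uses only the rough figures above, so treat the results as ballpark numbers.

```javascript
// Back-of-the-envelope throughput from the estimates above:
// ~5,000 images in ~20 minutes (lazy) vs ~35 minutes (improved).
function throughput(images, minutes) {
  const seconds = minutes * 60;
  return {
    imagesPerSecond: images / seconds,
    msPerImage: (seconds * 1000) / images,
  };
}

const lazy = throughput(5000, 20);
const improved = throughput(5000, 35);

console.log(lazy.imagesPerSecond.toFixed(2), lazy.msPerImage); // 4.17 240
console.log(improved.imagesPerSecond.toFixed(2), improved.msPerImage); // 2.38 420
console.log((improved.msPerImage / lazy.msPerImage).toFixed(2)); // 1.75
```

In other words, the synchronous script spent roughly 420 ms per image versus about 240 ms, around 1.75 times slower, in exchange for staying under 1 GB of memory.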

Hopefully you enjoyed this fun example of how batched asynchronous processing can get you into trouble on a computer with only 16 GB of RAM. It turns out the new MacBook Air with an M1 processor and 16 GB of RAM is still mortal after all.

*In the future, it may be fun to see whether I could force garbage collection in the asynchronous code, or limit the number of simultaneous asynchronous calls being spawned so that the system’s RAM is never exhausted.
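The second idea, limiting the number of simultaneous calls, can be sketched without any dependencies. `mapWithConcurrency` is a hypothetical helper of my own, not part of Node.js or image-js. It starts `limit` runner loops that each pull the next item from a shared index, so at most `limit` items are ever being processed at once. If a worker rejects, the returned promise rejects, but the other runners keep going; a real batch job would need to decide how to handle that.

```javascript
// Hypothetical bounded-concurrency map: runs `worker` over `items` with at
// most `limit` calls in flight, and resolves to the results in input order.
async function mapWithConcurrency(items, limit, worker) {
  if (!Number.isInteger(limit) || limit < 1) {
    throw new RangeError('limit must be a positive integer');
  }
  const results = new Array(items.length);
  let nextIndex = 0;

  // Each runner claims the next unprocessed index until the list is empty.
  // Claiming happens synchronously, so two runners never take the same item.
  async function runner() {
    while (nextIndex < items.length) {
      const index = nextIndex++;
      results[index] = await worker(items[index], index);
    }
  }

  const runnerCount = Math.min(limit, items.length);
  await Promise.all(Array.from({ length: runnerCount }, () => runner()));
  return results;
}

// Hypothetical usage with the lazy script's image-js calls:
// await mapWithConcurrency(jpgFiles, 8, async (file) => {
//   const image = await Image.load(`./large-batch-images/${file}`);
//   return image.resize({ width: 250, height: 250 }).save(`./thumbnails-large-250/${file}`);
// });
```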

Update: Taking a recommendation from Ray Walker, I was able to easily write a final script that processed the images in less than 1 minute of execution time without maxing out the new MacBook Air’s RAM or CPU. The RAM used by the Node process stayed below 700 MB during execution.

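```javascript
const { Image } = require('image-js');
const fs = require('fs');
// Current versions (v2+) of @supercharge/promise-pool use a named export;
// v1 exported the class directly: const PromisePool = require('@supercharge/promise-pool');
const { PromisePool } = require('@supercharge/promise-pool');

// Read the list of file names, then process them through a bounded pool
fs.readdir('./images', async (err, files) => {
  if (err) {
    console.error(err);
    return;
  }

  // At most 20 images are loaded, resized, and saved at any one time
  const { results, errors } = await PromisePool
    .for(files)
    .withConcurrency(20)
    .process(async (file) => {
      if (file.includes('.jpg')) {
        const image = await Image.load(`./images/${file}`);

        // Passing only a width keeps the original aspect ratio
        const resized = image.resize({ width: 250 });

        return resized.save(`./test/${file}`);
      }
    });

  console.log(`Finished ${results.length} tasks with ${errors.length} errors`);
});
```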
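One difference in this final script: it passes only `width: 250` to `resize`, which in image-js keeps the original aspect ratio (the height is calculated automatically) instead of squashing every photo into a 250x250 square like the first two scripts. A portrait photo therefore comes out taller than 250 px. If you want both dimensions capped, you could compute the target size yourself. `fitWithin` is a hypothetical helper of my own, not part of image-js.

```javascript
// Hypothetical helper (not part of image-js): compute thumbnail dimensions
// that fit inside a maxWidth x maxHeight box while keeping the aspect ratio.
function fitWithin(width, height, maxWidth, maxHeight) {
  // Use the tighter of the two scale factors, and never upscale small images.
  const scale = Math.min(maxWidth / width, maxHeight / height, 1);
  return {
    width: Math.max(1, Math.round(width * scale)),
    height: Math.max(1, Math.round(height * scale)),
  };
}

console.log(fitWithin(4000, 3000, 250, 250)); // { width: 250, height: 188 }
console.log(fitWithin(3000, 4000, 250, 250)); // { width: 188, height: 250 }
console.log(fitWithin(200, 100, 250, 250)); // { width: 200, height: 100 }
```

Inside the pool callback, `image.resize(fitWithin(image.width, image.height, 250, 250))` would then replace `image.resize({ width: 250 })`.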